Evaluation, context and getting practical on data metrics: the Make Data Count Summit 2024

September 17, 2024 | By: Make Data Count

The Make Data Count Summit 2024 took place in London earlier this month. Over two days, we explored which areas of research infrastructure, practice, evidence and evaluation we need to focus on to advance data metrics and our understanding of the reach and impact of open data.

Each session covered a different aspect of data metrics and data evaluation, bringing together the expertise and perspectives of leaders from research, institutions, funders and scholarly communications infrastructure, as well as insights from 125 attendees from all over the world.

Incorporating data evaluation into research assessment

The session ‘Policies that make data count’ explored data evaluation in funder processes and policy development with speakers Dagmar Meyer (European Research Council), Anna Diaz Font (Medical Research Foundation) and Maria Alejandra Tejada Gómez (Universidad Javeriana). Several funders allow data to be reported in grant applications and reports, but in practice the challenges lie in identifying the information that provides meaningful context about how the data are used, and in securing the resources needed to collect and evaluate that information. Our recognition that data evaluation is important needs to be matched by investment in the resources necessary to make it happen.

We also explored evaluation processes at institutions. Eric Perreault from Northwestern University urged universities to consider the goals that drive their assessment, articulate what they wish to measure, and align the data metrics they implement with those goals. Devika Madalli (INFLIBNET Centre) underscored this point, inviting us to consider the purpose behind the practices institutions seek to foster, and how institutional policies serve those goals. Cameron Neylon (Curtin University & COKI) reflected that data sharing and reuse often take place across data communities rather than across disciplines; it is therefore useful to look between disciplinary communities to identify those where change is happening. Michael Dougherty (University of Maryland & HELIOS Open) shared that the University of Maryland has implemented annotated CVs, in which researchers can list different outputs, including datasets, and provide information about their rigor, reproducibility and impact. Reflecting on how institutions can advance change, Eric called out the importance of sharing examples where open data has moved specific communities forward. Both Eric and Michael pointed to junior faculty as a key group for securing long-term change, given their active interest in reproducibility and open science.

Meaningful metrics: accounting for diverse datasets and the importance of context

A couple of sessions in the program looked at the nuances required to develop meaningful data metrics. Jean-Baptiste Poline (McGill University), Nick Juty (University of Manchester & ELIXIR) and Ivan Rodero (University of Utah) shared their experience working with decentralized and federated datasets. They touched on considerations such as capacity and equity in accessing and exploring data, and on the granularity of data, e.g., cases where specific data files are accessed in order to combine them with others and create new aggregated datasets.

In the session ‘Building trust avoiding pitfalls’, Ludo Waltman (Centre for Science and Technology Studies (CWTS), Leiden University) facilitated a discussion of the challenges of transparency, rigor and context for data metrics. Laetitia Bracco (University of Lorraine & French Open Science Monitor) invited us to consider whether the metrics we use are relevant to what we seek to monitor. She talked about the Open Science Monitoring Initiative (OSMI), which is applying text mining to identify datasets in articles, collect associated information (e.g., repository name, license) and look for both implicit and explicit mentions of data. Alice Howarth shared how the UK Reproducibility Network is bringing together UK institutions and service providers to develop indicators that evaluate the adoption of open science practices. Stefanie Haustein (University of Ottawa & ScholCommLab) called for evidence on data reuse practices and appropriate benchmarking as the foundation of meaningful data metrics.

Data metrics in practice

The Summit showcased examples of the practical implementation of data metrics across different spheres of research activity. Guy Cochrane outlined how the Global Biodata Coalition regularly collects and reviews information about page visits, time spent on services, and data citations. Jose Benito Gonzalez Lopez noted that the repository Zenodo displays data citations and provides standardized counts of views and downloads for datasets through the COUNTER Code of Practice for Research Data developed by Make Data Count. Graham Smith talked about Springer Nature’s partnership with OpenAIRE to expand the number of data citations captured in publications in their journals. The speakers highlighted that information allowing the community to gain insights into data usage is already being collected and shared, making it possible, for example, to compare trends year-on-year.

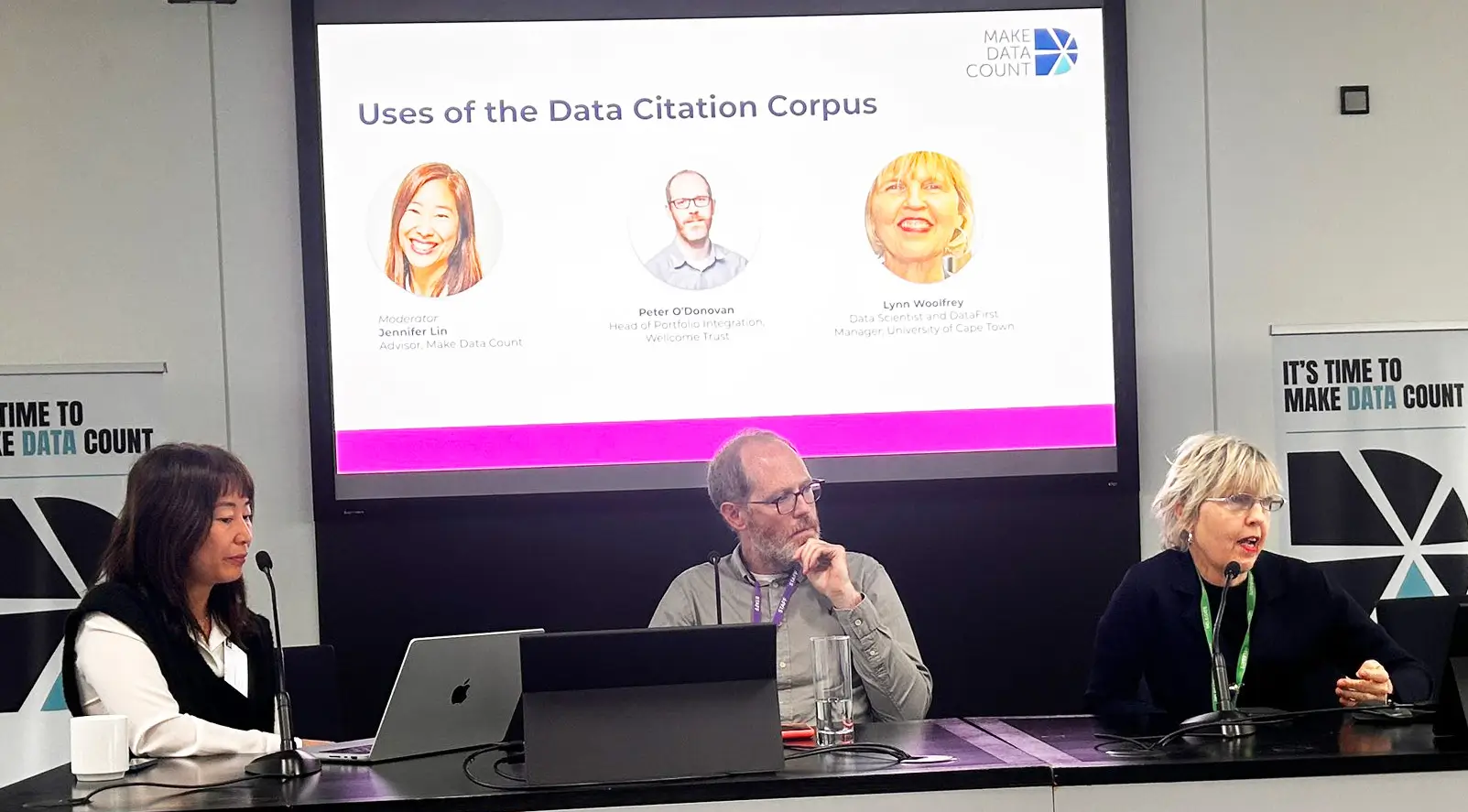

A discussion with Peter O’Donovan (Wellcome Trust) and Lynn Woolfrey (University of Cape Town) explored the insights that funders and institutions can already gain through the Data Citation Corpus. Peter noted the value of understanding the use of data as part of the evaluation of funded programs, and how the Data Citation Corpus could be combined with the grant information that funders collect. Lynn explained that the Corpus can help repositories showcase the impact of their data collections and surface gaps in expected usage; from an institutional perspective, the Corpus can inform discussions with funders and policy makers about the need for resourcing and infrastructure for data.

Priorities ahead

In breakout groups, we also invited attendees to identify actionable items to make data metrics and data evaluation the norm. Some of the key themes raised include:

- Create narratives that tell the success stories of researchers and communities who have benefited from data reuse and its impact

- Support ongoing initiatives such as the Data Citation Corpus or OSMI, and find synergies so that projects can complement and build on each other

- Develop Make Data Count guidance for the metadata required for data citations and other measures of data usage to feed into the development of data metrics

- Provide a catalog of resources for policies, evidence, guidance and events in this space

- Surface more data-article connections (while bearing in mind that these are not the only relevant connections) and encourage journals to do more to support data citations

- Develop working groups to work through some of the topics raised and support institutions and researchers

- Foster global collaborations that include groups from diverse regions in policy development, training, and infrastructure for data metrics

The Summit opened up many areas for Make Data Count to take forward with the community in order to advance responsible metrics and evaluation for data. We thank all speakers and attendees for their engagement and contributions.

We’ll follow up on the discussions around institutional processes with a working group focused on supporting institutions in their implementation of data evaluation practices. We are also pleased to share that our upcoming redesigned website, which we will unveil soon, will include a dedicated Resources page! We will share more about our plans in the coming weeks; in the meantime, if you are interested in collaborating on any of the areas above, do get in touch!

You can find the slides from the Make Data Count Summit 2024 at our Zenodo community: https://doi.org/10.5281/zenodo.13739400